What is edge computing in IoT?


The growing number of "connected" devices is generating an enormous amount of data, and this will continue as Internet of Things (IoT) technologies and use cases grow in the coming years. According to research firm Gartner, by 2020 there will be as many as 20 billion connected devices, generating billions of bytes of data per user. These devices are not just smartphones and laptops, but also connected cars, vending machines, smart wearables, surgical robots, and more.

The large volume of data generated by these devices has to be pushed to a centralized cloud for retention (data management), analysis, and decision-making. The results of the analysis are then transmitted back to the device. This round trip consumes substantial network and cloud infrastructure resources and adds latency and bandwidth pressure, which affects mission-critical IoT uses. For example, a self-driving connected car generates a large amount of data every hour; that data must be uploaded to the cloud and analyzed, and instructions must be sent back to the car. High latency or resource congestion may delay the response to the car, which in serious cases could cause a traffic accident.

IoT Edge Computing

This is where edge computing comes in. An edge computing architecture can be used to optimize cloud computing systems so that data processing and analysis are performed at the edge of the network, closer to the data source. With this approach, data can be collected and processed near the device itself rather than being sent to the cloud or a data center.

Benefits of edge computing:
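As a rough illustration of processing data near the device, the following minimal Python sketch shows an edge node reducing a window of raw sensor readings to a compact summary, so that only the summary (plus any out-of-range values) would need to travel to the cloud. The `Summary` schema, the `summarize_at_edge` function, and the threshold value are hypothetical names chosen for this example; they do not come from the article or from any particular edge platform.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Iterable, List


@dataclass
class Summary:
    """Compact record an edge node might forward upstream (hypothetical schema)."""
    count: int
    mean_value: float
    max_value: float
    alerts: List[float]


def summarize_at_edge(readings: Iterable[float], alert_threshold: float) -> Summary:
    """Reduce a window of raw readings to a summary on the edge node.

    Readings above alert_threshold are kept verbatim so the cloud still
    sees the values that may need attention; everything else is aggregated.
    """
    values = list(readings)
    if not values:
        raise ValueError("no readings to summarize")
    return Summary(
        count=len(values),
        mean_value=mean(values),
        max_value=max(values),
        alerts=[v for v in values if v > alert_threshold],
    )


if __name__ == "__main__":
    # Five raw temperature readings collected locally (made-up values).
    window = [21.0, 21.5, 22.0, 35.0, 21.5]
    summary = summarize_at_edge(window, alert_threshold=30.0)
    # Only this one small record would be sent upstream instead of every reading.
    print(summary)
```

In this sketch the upstream message size stays constant regardless of how many readings arrive in a window, apart from the alert values. A real deployment would also need to decide the window length and what to do when the uplink is unavailable, which this example does not cover.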

The advent of edge computing does not replace the need for traditional data centers or cloud computing infrastructure. Instead, it coexists with the cloud as the computing power of the cloud is distributed to endpoints.

Machine Learning at the Network Edge

Machine learning (ML) is a complementary technology to edge computing. In machine learning, the generated data is fed to the ML system to produce an analytical decision model. In IoT and edge computing scenarios, machine learning can be implemented in two ways.

Edge computing and the Internet of Things

Edge computing, together with machine learning technology, lays the foundation for the agility of future communications for IoT. The upcoming 5G telecommunication network will provide a more advanced network for IoT use cases. In addition to high-speed and low-latency data transmission, 5G will also provide a telecommunication network based on mobile edge computing (MEC), enabling automatic implementation and deployment of edge services and resources. In this revolution, IoT device manufacturers and software application developers will be more eager to take advantage of edge computing and analytics. We will see more intelligent IoT use cases and an increase in intelligent edge devices.

Original link: http://www.futuriom.com/articles/news/what-is-edge-computing-for-iot/2018/08

Recommend

Performance Agreement: API Rate Limit

Rate limiting is a key control mechanism used to ...

Actual combat case: 90% of network engineers have encountered it! In the scenario of unequal paths, packets will be lost through the firewall. How to solve it?

Background Recently, a certain enterprise has rec...

Future development and current progress of China's Internet of Things industrialization

It cannot be denied that we are experiencing an e...

To accelerate 5G innovation and monetization, Ericsson and several operators jointly established a network API company

According to foreign media reports, communication...

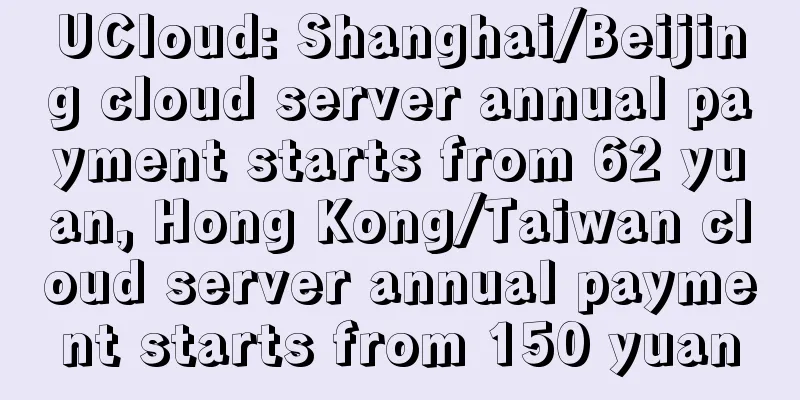

DogYun: San Jose AS4837 line monthly payment starts at 12 yuan, Los Angeles CN2+AS9929 monthly payment starts at 20 yuan

I have shared information about DogYun (狗云) many ...

Is there still room for wireless mesh networking in the enterprise?

【51CTO.com Quick Translation】Wireless mesh networ...

GSA report: 63 operators around the world have launched commercial 5G services

The latest global 5G network development report f...

What exactly is RedCap?

With the freezing of 3GPP R17, a new term has gra...

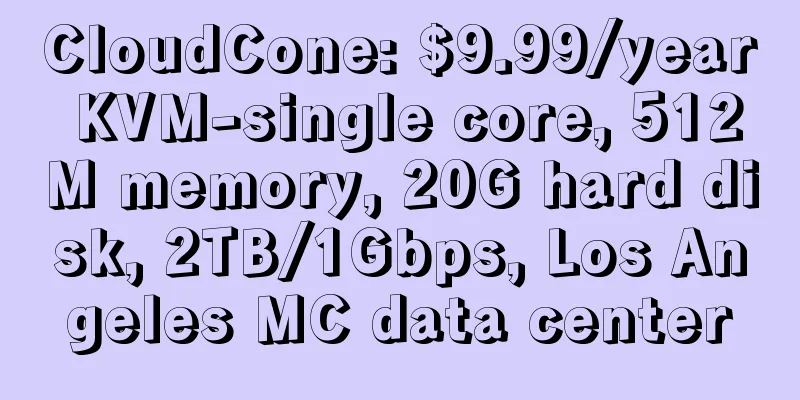

[Black Friday] Zgovps: $12.9/year-1GB/20GB/2TB/Japan IIJ/Germany/Los Angeles AS4837, etc.

Zgovps also released a promotion during this year...

LOCVPS National Day Promotion: 40% off for Netherlands/Germany/Russia (CN2) VPS, 20% off for all items, top up 100 yuan and get 10 yuan free

LOCVPS has released a special discount plan durin...

Sharktech: Los Angeles E3 high-security 1Gbps unlimited traffic server starting at $59/month

Sharktech is a computer room that focuses on high...

Testing infrastructure is like a butcher's knife, WOT Ru Bingsheng tells you how to avoid "falling into the trap"

【51CTO.com original article】Seven years of hard w...

What exactly is the performance problem with TCP?

Overview The performance issue of TCP is essentia...

Why is the price of GPRS module as low as ten yuan? My experience in the localization process of IoT module

The development history of the entire communicati...

Let's talk about IPv4 to IPv6 tunnel

[[273990]] When is IPv6 tunneling used? Connect t...